Install the new bare metal server(s) for the production ELK cluster

Installation procedure: https://docs.softwareheritage.org/sysadm/server-architecture/howto-install-new-physical-server.html

Inventory:

- esnode8: https://inventory.internal.admin.swh.network/dcim/devices/288/

- esnode9: https://inventory.internal.admin.swh.network/dcim/devices/289/

Environment: production

Summary:

-

esnode8

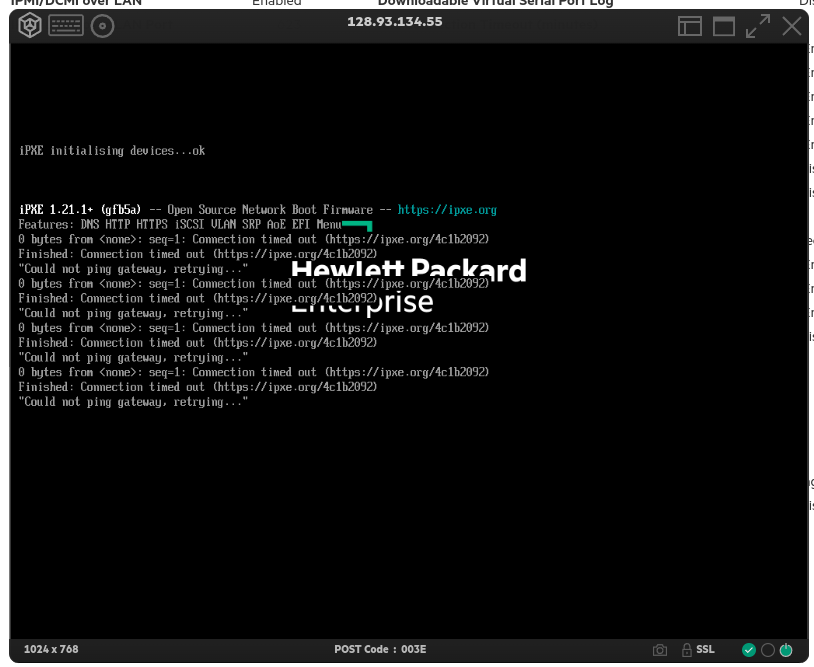

- Management address (DNS): 128.93.134.55 (N/A)

- VLAN configuration: VLAN440

- Internal IP(s): 192.168.100.65

- Internal DNS name(s): esnode8.internal.softwareheritage.org

-

esnode9

- Management address (DNS): 128.93.134.56 (N/A)

- VLAN configuration: VLAN440

- Internal IP(s): 192.168.100.66

- Internal DNS name(s): esnode9.internal.softwareheritage.org

For each node:

- Declare the servers in the inventory

- Add the management info in the credential store

- Install the OS

- (if needed) Add puppet configuration

- Run puppet so it installs the required software with the es profile

- Adapt the zfs configuration accordingly (if puppet can't do it already)

- Create a swap at least the size of the machine's memory

- Update firewall rules with the new machine's ip (e.g. swh_$environment_kube_workers, ...)

- (other actions if needed, drop unneeded actions)

- Tag(s) grafana

- Update passwords in credential store

Activity


- Antoine R. Dumont added activity::Deployment label


- Antoine R. Dumont changed the description


- Owner

We need to ask for the access port configuration. It's usually done when we request the installation in a ticket to the DSI. This time, we installed the servers ourselves, so the request was not made.


- Antoine R. Dumont mentioned in commit ipxe@c3014cbf


- Author Owner

[stand-by] We need to request the access port configuration from the DSI; Vince will do it tomorrow.

- Antoine R. Dumont changed the description


- Antoine R. Dumont mentioned in commit c727d12b


- Antoine R. Dumont mentioned in commit ipxe@60105032


- Antoine R. Dumont mentioned in commit ipxe@18cc6460


- Author Owner

By the way, the esnode8.yaml and esnode9.yaml configuration files are pushed to the ipxe repository. So if someone takes over, what remains is to build the ISO and start the install.

- Antoine R. Dumont mentioned in commit ipxe@dcf7a9cd


- Antoine R. Dumont mentioned in commit ipxe@ac4a31ad


- Antoine R. Dumont mentioned in commit ipxe@38e6945b


- Antoine R. Dumont mentioned in commit ipxe@306b101a


- Author Owner

The OS got installed as bullseye on both machines, though (only noticed after the install).

I updated the template to default to bookworm, so the next install should be bookworm.

I've dist-upgraded the machines to bookworm.

What remains is to configure the machines so they can join the ES cluster.
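For reference, a bullseye to bookworm dist-upgrade follows the usual Debian procedure: point APT at the new suite, then upgrade in two passes. The sketch below is a minimal illustration, assuming GNU sed and a classic one-line /etc/apt/sources.list (not the deb822 format); `rewrite_suite` and `dist_upgrade` are hypothetical helpers, not the commands actually run on esnode8/esnode9. Third-party lists under /etc/apt/sources.list.d (such as the elasticsearch repository) are left untouched, and bookworm's new `non-free-firmware` component is not added.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of a bullseye -> bookworm dist-upgrade.

# Rewrite the Debian suite name in an APT sources file, in place (GNU sed).
# The word-boundary match also covers "bullseye-updates" and "bullseye-security".
rewrite_suite() {
    local file="$1" from="${2:-bullseye}" to="${3:-bookworm}"
    sed -i "s/\b${from}\b/${to}/g" "$file"
}

# Full upgrade sequence; must run as root on the target machine.
# Only defined here, so the file can be sourced without side effects.
dist_upgrade() {
    rewrite_suite /etc/apt/sources.list || return 1
    apt-get update || return 1
    # First pass: "apt-get upgrade" never installs new packages (minimal upgrade).
    apt-get -y upgrade || return 1
    # Second pass: the full distribution upgrade.
    apt-get -y dist-upgrade
}
```

After sourcing the file, `dist_upgrade` performs both passes; a reboot into the new kernel is still needed afterwards.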

- Antoine R. Dumont mentioned in commit swh/infra/puppet/puppet-swh-site@6d3d64d6


- Antoine R. Dumont mentioned in commit swh/infra/puppet/puppet-swh-site@de6a01ac


- Antoine R. Dumont marked the checklist item (if needed) Add puppet configuration as completed


- Antoine R. Dumont marked the checklist item Run puppet so it installs the required software with the es profile as completed


- Antoine R. Dumont marked the checklist item Adapt the zfs configuration accordingly (if puppet can't do it already) as completed


- Antoine R. Dumont marked the checklist item Install the OS as completed


- Antoine R. Dumont changed the description


- Author Owner

A few bumps in the road, but esnode8 and esnode9 are joining the ES cluster.

The puppet certificate had to be generated from pergamon (as we currently do when we need to renew the puppet certificates for a bookworm machine).

I've added a `/usr/local/bin/puppet5-generate-certificate.sh $HOST_FQDN $HOST_IP` script to ease the generation, signing and copy of the certificate files. It assumes the directory tree is already present on the $HOST_FQDN machine (which is the case after a failed puppet agent run, for example). It's a basic script for now.

The first puppet agent run failed, so the elasticsearch sources.list had to be adapted slightly so that the first run goes through without issues.
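The helper script itself is not included in this ticket. Purely to illustrate what "generation, signing and copy" involves, here is a hypothetical sketch, assuming it runs as root on pergamon (the Puppet 5 CA, where `puppet cert generate` still exists; that subcommand was removed in Puppet 6), that ssldir is /var/lib/puppet/ssl on both machines (check with `puppet config print ssldir`), and that root can scp to $HOST_IP. The function names and the source paths, which follow the usual Puppet 5 CA layout, are assumptions to double-check before use.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of a certificate helper for bookworm agents that
# cannot do their first run against the Puppet 5 master.
# Assumes: run as root on the Puppet 5 CA, same ssldir on both sides.

SSLDIR="${SSLDIR:-/var/lib/puppet/ssl}"

# Print "source destination" pairs of the files the agent needs:
# its signed certificate, its private key and the CA certificate.
cert_copy_plan() {
    local fqdn="$1" ssldir="${2:-$SSLDIR}"
    printf '%s %s\n' \
        "$ssldir/ca/signed/$fqdn.pem" "$ssldir/certs/$fqdn.pem" \
        "$ssldir/private_keys/$fqdn.pem" "$ssldir/private_keys/$fqdn.pem" \
        "$ssldir/certs/ca.pem" "$ssldir/certs/ca.pem"
}

# Generate and sign the certificate, then copy the files to the host.
# Destination directories must already exist on the host.
generate_and_copy() {
    local fqdn="$1" ip="$2"
    if [ -z "$fqdn" ] || [ -z "$ip" ]; then
        echo "usage: generate_and_copy FQDN IP" >&2
        return 1
    fi
    # Puppet 5 only: creates the key pair and signs the certificate on the CA.
    puppet cert generate "$fqdn" || return 1
    # scp -p preserves file modes (the private key stays restricted);
    # stdin comes from /dev/null so scp cannot swallow the remaining pairs.
    cert_copy_plan "$fqdn" | while read -r src dst; do
        scp -p "$src" "root@$ip:$dst" </dev/null || exit 1
    done
}
```

Usage, after sourcing the file on pergamon: `generate_and_copy esnode8.internal.softwareheritage.org 192.168.100.65`.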

The zpools were prepared manually [1] [2]. Once the first puppet agent run is done, we have the necessary tools for that [1].

Some extra elasticsearch configuration had to be adapted so the service could start (details are in the linked commit); it was complaining about xpack settings not being set.

Some notes:

- megacli is no longer packaged in bookworm, so its installation failed

- [repeat] we can't just trigger the first puppet agent run on a bookworm machine while the puppet master remains at puppet 5

- we could probably evolve the elasticsearch role (swh-site) to use profile::zfs::common so we can declare the zpool there (and avoid creating it manually), like we do for the rancher metal nodes
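Until the role declares the zpool, the manual steps from [1] and [2] can be scripted. This sketch assumes the same pool and dataset names and whole-disk NVMe device IDs; `prepare_es_pool` and the `DRY_RUN` switch are hypothetical. It sets `mountpoint=none` on the pool's root dataset at creation time (`zpool create -O`), which avoids the extra `zfs set mountpoint=none elasticsearch` step that was needed on esnode9. As in the original commands, the pool is a plain stripe across the disks, with no ZFS-level redundancy.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: create the elasticsearch pool and data dataset in one go.
# Set DRY_RUN=1 to print the commands instead of running them.

run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Usage: prepare_es_pool DISK [DISK...]
prepare_es_pool() {
    if [ "$#" -eq 0 ]; then
        echo "usage: prepare_es_pool DISK..." >&2
        return 1
    fi
    # -O sets a property on the pool's root dataset at creation time,
    # so the root dataset is never mounted on /elasticsearch.
    run zpool create -O mountpoint=none elasticsearch "$@" || return 1
    # Dataset actually used by elasticsearch, without access-time updates.
    run zfs create -o mountpoint=/srv/elasticsearch -o atime=off elasticsearch/data
}
```

For example, `DRY_RUN=1 prepare_es_pool nvme-MO003200KYDNC_S70NNT0X604313 nvme-MO003200KYDNC_S70NNT0X604315` prints the two commands without touching the disks.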

[1]

```
root@esnode8:~# zpool create elasticsearch nvme-MO003200KYDNC_S70NNT0X604237 nvme-MO003200KYDNC_S70NNT0X604239 nvme-MO003200KYDNC_S70NNT0X604240 nvme-MO003200KYDNC_S70NNT0X604242
root@esnode8:~# zpool status
  pool: elasticsearch
 state: ONLINE
config:

        NAME                                 STATE     READ WRITE CKSUM
        elasticsearch-data                   ONLINE       0     0     0
          nvme-MO003200KYDNC_S70NNT0X604237  ONLINE       0     0     0
          nvme-MO003200KYDNC_S70NNT0X604239  ONLINE       0     0     0
          nvme-MO003200KYDNC_S70NNT0X604240  ONLINE       0     0     0
          nvme-MO003200KYDNC_S70NNT0X604242  ONLINE       0     0     0

errors: No known data errors
root@esnode8:~# zpool list -v
NAME                                 SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP    HEALTH  ALTROOT
elasticsearch                       11.6T   600K  11.6T        -         -     0%     0%  1.00x    ONLINE  -
  nvme-MO003200KYDNC_S70NNT0X604237 2.91T    66K  2.91T        -         -     0%  0.00%      -    ONLINE
  nvme-MO003200KYDNC_S70NNT0X604239 2.91T   174K  2.91T        -         -     0%  0.00%      -    ONLINE
  nvme-MO003200KYDNC_S70NNT0X604240 2.91T   180K  2.91T        -         -     0%  0.00%      -    ONLINE
  nvme-MO003200KYDNC_S70NNT0X604242 2.91T   180K  2.91T        -         -     0%  0.00%      -    ONLINE
root@esnode8:~# zfs create -o mountpoint=/srv/elasticsearch -o atime=off elasticsearch/data
root@esnode8:~# mount | grep elastic
elasticsearch/data on /srv/elasticsearch type zfs (rw,noatime,xattr,noacl)
root@esnode8:~# zfs list
NAME                USED  AVAIL  REFER  MOUNTPOINT
elasticsearch       214K  11.5T    24K  none
elasticsearch/data   24K  11.5T    24K  /srv/elasticsearch
```

[2]

```
root@esnode9:~# zpool create elasticsearch nvme-MO003200KYDNC_S70NNT0X604313 nvme-MO003200KYDNC_S70NNT0X604315 nvme-MO003200KYDNC_S70NNT0X604316 nvme-MO003200KYDNC_S70NNT0X604246
root@esnode9:~# zfs list
NAME                USED  AVAIL  REFER  MOUNTPOINT
elasticsearch       118K  11.5T    24K  /elasticsearch
root@esnode9:~# zfs create -o mountpoint=/srv/elasticsearch -o atime=off elasticsearch/data
root@esnode9:~# zfs list
NAME                USED  AVAIL  REFER  MOUNTPOINT
elasticsearch       186K  11.5T    24K  /elasticsearch
elasticsearch/data   24K  11.5T    24K  /srv/elasticsearch
root@esnode9:~# mount | grep elastic
elasticsearch on /elasticsearch type zfs (rw,xattr,noacl)
elasticsearch/data on /srv/elasticsearch type zfs (rw,noatime,xattr,noacl)
root@esnode9:~# zfs set mountpoint=none elasticsearch
root@esnode9:~# mount | grep elastic
elasticsearch/data on /srv/elasticsearch type zfs (rw,noatime,xattr,noacl)
```

- Author Owner

- Create a swap at least the size of the machine's memory

esnode7, which also has 64G of RAM (like esnode8-9), has no swap. esnode[1-3] have 32G of swap, which matches their RAM.
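To apply the "swap at least the size of the memory" rule on a given host, the size can be derived from `MemTotal` in /proc/meminfo. A minimal sketch; `swap_gib` and `create_swapfile` are hypothetical helpers. Since `MemTotal` sits slightly below the installed RAM, the size is rounded up to the next power of two in GiB, which may overshoot on hosts with non-power-of-two RAM (acceptable for an "at least" rule). The swap-file variant assumes the root filesystem is not ZFS, since swap files are not supported on ZFS.

```shell
#!/usr/bin/env bash
# Hypothetical helpers to size and create swap following the
# "swap >= RAM" checklist rule.

# Print the swap size in GiB: MemTotal (kB) rounded up to a power of two.
swap_gib() {
    local meminfo="${1:-/proc/meminfo}" kb gib=1
    kb=$(awk '/^MemTotal:/ {print $2}' "$meminfo")
    [ -n "$kb" ] || return 1
    while [ $((gib * 1048576)) -lt "$kb" ]; do
        gib=$((gib * 2))
    done
    echo "$gib"
}

# Create and enable a swap file of that size (run as root, non-ZFS root fs).
create_swapfile() {
    local path="${1:-/swapfile}" size
    size=$(swap_gib) || return 1
    # dd rather than fallocate: swapon(8) warns that preallocated files
    # may be seen as having holes on some filesystems.
    dd if=/dev/zero of="$path" bs=1M count=$((size * 1024)) || return 1
    chmod 600 "$path"
    mkswap "$path" && swapon "$path" || return 1
    echo "$path none swap sw 0 0" >> /etc/fstab
}
```

On a 64G host such as esnode7-9, `swap_gib` should print 64.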

- Update firewall rules with the new machine's ip (e.g. swh_$environment_kube_workers, ...)

No need, it seems: we can already reach the nodes from our network.

```
root@pergamon:~# curl http://esnode8.internal.softwareheritage.org:9200
{
  "name" : "esnode8",
  "cluster_name" : "swh-logging-prod",
  "cluster_uuid" : "-pJ9DxzdTIGyqhG-p7fh0Q",
  "version" : {
    "number" : "8.15.1",
    "build_flavor" : "default",
    "build_type" : "deb",
    "build_hash" : "253e8544a65ad44581194068936f2a5d57c2c051",
    "build_date" : "2024-09-02T22:04:47.310170297Z",
    "build_snapshot" : false,
    "lucene_version" : "9.11.1",
    "minimum_wire_compatibility_version" : "7.17.0",
    "minimum_index_compatibility_version" : "7.0.0"
  },
  "tagline" : "You Know, for Search"
}
root@pergamon:~# curl http://esnode9.internal.softwareheritage.org:9200
{
  "name" : "esnode9",
  "cluster_name" : "swh-logging-prod",
  "cluster_uuid" : "-pJ9DxzdTIGyqhG-p7fh0Q",
  "version" : {
    "number" : "8.15.1",
    "build_flavor" : "default",
    "build_type" : "deb",
    "build_hash" : "253e8544a65ad44581194068936f2a5d57c2c051",
    "build_date" : "2024-09-02T22:04:47.310170297Z",
    "build_snapshot" : false,
    "lucene_version" : "9.11.1",
    "minimum_wire_compatibility_version" : "7.17.0",
    "minimum_index_compatibility_version" : "7.0.0"
  },
  "tagline" : "You Know, for Search"
}
```
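The same reachability check can be run against several nodes in one go, also confirming that each one reports the expected cluster name. A minimal sketch; the expected name `swh-logging-prod` and the port come from the output above, while `es_cluster_name` and `check_nodes` are hypothetical helpers. The JSON is parsed with grep/sed to avoid depending on jq.

```shell
#!/usr/bin/env bash
# Hypothetical reachability check for new elasticsearch nodes.

EXPECTED_CLUSTER="swh-logging-prod"

# Extract "cluster_name" from the JSON returned by GET / (read on stdin).
es_cluster_name() {
    grep -o '"cluster_name" *: *"[^"]*"' | head -n 1 | sed 's/.*: *"\([^"]*\)"$/\1/'
}

# Query each host on port 9200 and report whether it joined the expected cluster.
check_nodes() {
    local host name rc=0
    for host in "$@"; do
        name=$(curl -sf --max-time 5 "http://$host:9200/" | es_cluster_name)
        if [ "$name" = "$EXPECTED_CLUSTER" ]; then
            echo "OK   $host ($name)"
        else
            echo "FAIL $host (cluster: ${name:-unreachable})"
            rc=1
        fi
    done
    return "$rc"
}
```

For example: `check_nodes esnode8.internal.softwareheritage.org esnode9.internal.softwareheritage.org`; the exit status is non-zero if any node is unreachable or in another cluster.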

- Antoine R. Dumont marked the checklist item Update firewall rules with the new machine's ip (e.g. swh_$environment_kube_workers, ...) as completed

- Antoine R. Dumont changed the description


- Author Owner

The new esnode8 and esnode9 nodes have fully joined the cluster.

Let's close this.

```
root@esnode1:~# server=http://esnode1.internal.softwareheritage.org:9200; date; curl -s $server/_cat/allocation?v\&s=node; echo; curl -s $server/_cluster/health | jq
Tue Jan 21 13:45:48 UTC 2025
shards shards.undesired write_load.forecast disk.indices.forecast disk.indices disk.used disk.avail disk.total disk.percent host           ip             node    node.role
   638                0                 0.0                 5.3tb        5.3tb     5.3tb      1.5tb      6.8tb           77 192.168.100.61 192.168.100.61 esnode1 cdfhilmrstw
   629                0                 0.0                 5.5tb        5.5tb     5.5tb      1.3tb      6.8tb           80 192.168.100.62 192.168.100.62 esnode2 cdfhilmrstw
   636                0                 0.0                 5.3tb        5.3tb     5.4tb      1.4tb      6.8tb           78 192.168.100.63 192.168.100.63 esnode3 cdfhilmrstw
   644                0                 0.0                 5.2tb        5.2tb     5.2tb      8.6tb     13.8tb           37 192.168.100.64 192.168.100.64 esnode7 cdfhilmrstw
   644                0                 0.0                 5.2tb        5.2tb     5.2tb      6.2tb     11.4tb           45 192.168.100.65 192.168.100.65 esnode8 cdfhilmrstw
   641                0                 0.0                 5.2tb        5.2tb     5.3tb      6.1tb     11.4tb           46 192.168.100.66 192.168.100.66 esnode9 cdfhilmrstw

{
  "cluster_name": "swh-logging-prod",
  "status": "green",                        <- "green, green, super green!"
  "timed_out": false,
  "number_of_nodes": 6,                     <- 4 + the 2 new nodes, 6 good!
  "number_of_data_nodes": 6,
  "active_primary_shards": 1916,
  "active_shards": 3832,
  "relocating_shards": 0,                   <- No shards to reassign or
  "initializing_shards": 0,                 <- shuffle around, everything
  "unassigned_shards": 0,                   <- looks good
  "delayed_unassigned_shards": 0,           <-
  "number_of_pending_tasks": 0,             <-
  "number_of_in_flight_fetch": 0,
  "task_max_waiting_in_queue_millis": 0,
  "active_shards_percent_as_number": 100
}
```

- Antoine R. Dumont closed