Upgrade Rancher to 2.8.x

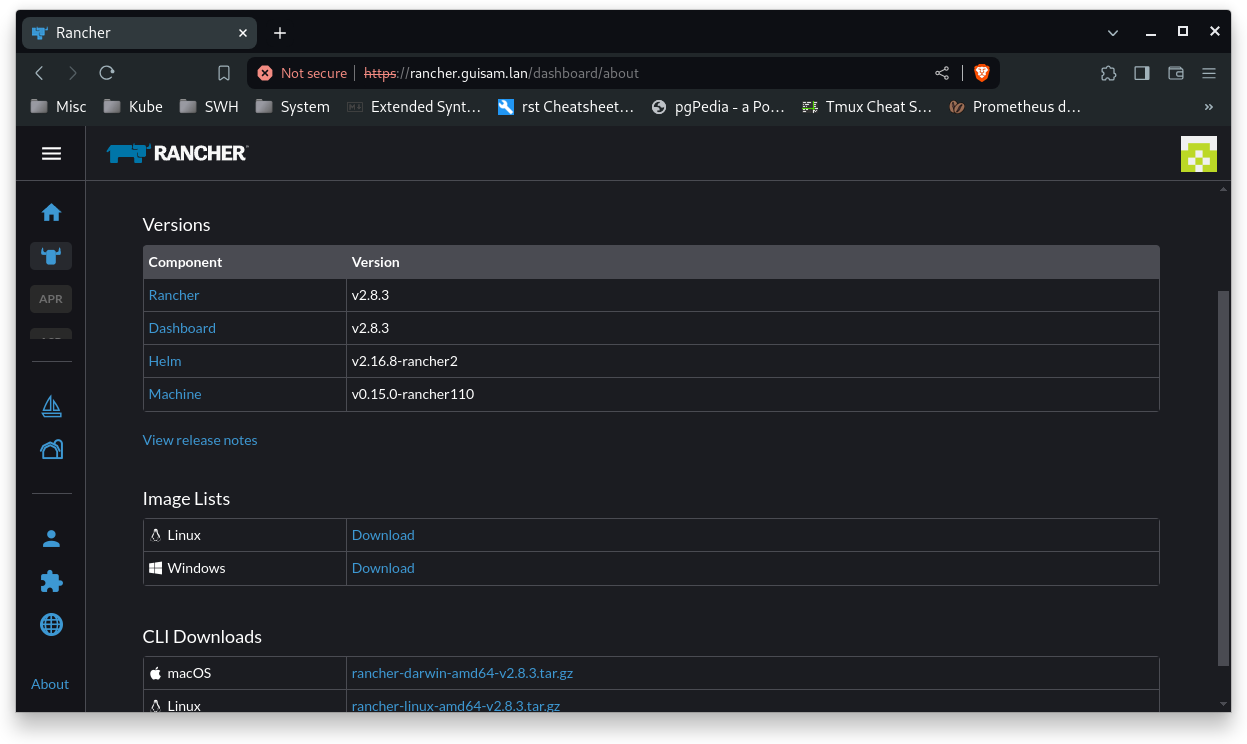

The Rancher 2.8 branch is now at version 2.8.3, so we can start planning the upgrade of our 2.7.5 installation to 2.8.

As usual, the changelogs and migration procedures should be checked carefully to detect any potential issues we could encounter.

Related issues:

- #5036 Dynamic infrastructure [Roadmap - Tooling and infrastructure]

Activity

- Vincent Sellier added kubernetes rancher upgrade labels

- Vincent Sellier marked this issue as related to #4998 (closed)

- Vincent Sellier assigned to @vsellier

- Comment from the issue author (Owner):

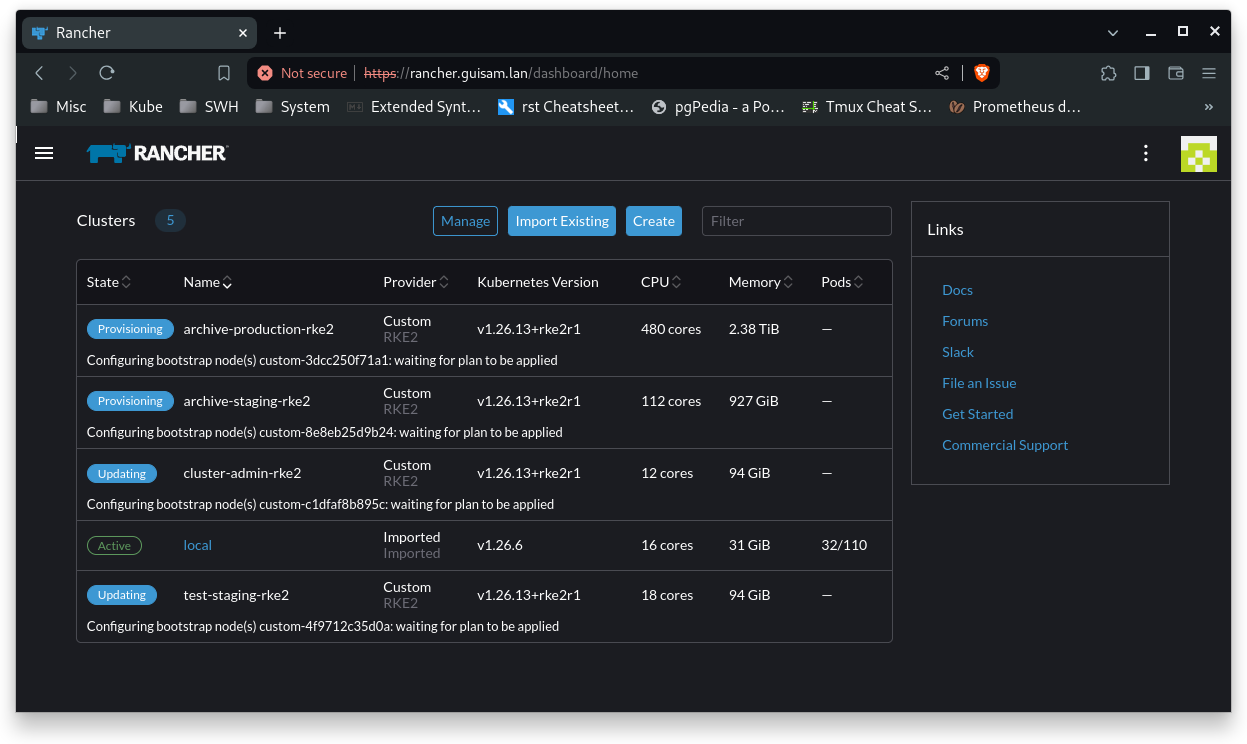

The Kubernetes compatibility matrix for Rancher 2.8.3 covers versions 1.25 to 1.28 (the cluster version is 1.26).
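The range check above can be scripted before each upgrade. This is a minimal sketch, assuming bash and GNU coreutils `sort -V`; `is_k8s_supported` is a hypothetical helper (not a Rancher or kubectl command) that compares only the major.minor part of a version string against an inclusive range.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Rancher or kubectl):
#   is_k8s_supported VERSION MIN MAX
# Succeeds when the major.minor part of VERSION lies in [MIN, MAX].
# Assumes GNU coreutils `sort -V` for version-aware ordering.
is_k8s_supported() {
  local version="${1#v}" min="$2" max="$3" minor
  # e.g. v1.26.6+rke2r1 -> 1.26.6+rke2r1 -> 1.26
  minor="$(printf '%s\n' "$version" | cut -d. -f1,2)"
  # MIN must sort first and MAX must sort last when paired with the version
  [ "$(printf '%s\n' "$min" "$minor" | sort -V | head -n1)" = "$min" ] &&
    [ "$(printf '%s\n' "$minor" "$max" | sort -V | tail -n1)" = "$max" ]
}

# Example (assumes jq is installed and kubectl points at the cluster to check):
#   is_k8s_supported "$(kubectl version -o json | jq -r .serverVersion.gitVersion)" 1.25 1.28 \
#     && echo "supported by Rancher 2.8.3"
```

`sort -V` is used instead of a plain string comparison so that, for example, 1.9 correctly sorts before 1.25.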

Changelog and migration recommendations from 2.7.5 to 2.8.3:

- 2.7.6: Nothing to declare

- 2.7.7: Declared as a "do not use" (DNU) version

- 2.7.8: Nothing to declare

- 2.7.9: Not available in stable branch

- 2.7.10: during the upgrade, all the clusters must be active

- 2.7.11:

  - if installed, the upgrade must be done to 2.8.3 only

  - Add support for Kubernetes 1.27

- 2.7.12: only for Rancher Prime customers

- 2.8.0:

  - Add support for Kubernetes 1.27

  - Remove support for Kubernetes 1.23 and 1.24 (all our clusters are on 1.26+)

  - during the upgrade, all the clusters must be active

- 2.8.1: minor upgrade from 2.8.0 for air-gapped installations

- 2.8.2: security release

- 2.8.3: Add support for Kubernetes 1.28
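The requirement that all clusters be active during the upgrade can be checked from the Rancher `local` cluster. This is a minimal sketch, assuming bash and awk, and assuming that "active" in the Rancher UI corresponds to the `Ready` condition of each `clusters.management.cattle.io` object being `True`; `all_clusters_ready` is a hypothetical helper that only parses the `name status` lines produced by the kubectl JSONPath query shown in the comment.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads "<cluster-name> <Ready-condition-status>" lines
# on stdin and fails, listing offenders on stderr, if any cluster is not True.
# Assumption: UI "Active" state == Ready condition "True".
all_clusters_ready() {
  awk '
    NF == 0 { next }                        # ignore blank lines
    $2 != "True" { print "not ready: " $1 > "/dev/stderr"; bad = 1 }
    END { exit bad + 0 }
  '
}

# Example (assumes kubectl access to the Rancher "local" cluster):
#   kubectl --context local get clusters.management.cattle.io \
#     -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.conditions[?(@.type=="Ready")].status}{"\n"}{end}' \
#     | all_clusters_ready && echo "all clusters are ready"
```

A cluster with no `Ready` condition at all produces an empty second field and is reported as not ready, which errs on the safe side before an upgrade.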

Edited by Vincent Sellier

- Guillaume Samson mentioned in commit swh/infra/ci-cd/k8s-clusters-conf@fcaaca8a

- Guillaume Samson mentioned in commit swh/infra/ci-cd/k8s-clusters-conf@e41275a6

- Comment from a project Owner:

Test the Rancher update in minikube.

Command aliases:

    ᐅ alias {mk,kb}
    mk=minikube
    kb=kubectl

Start minikube with Kubernetes v1.26.6:

    ᐅ mk delete
    ᐅ mk start --insecure-registry "192.168.49.0/24" \
        --network-plugin=cni \
        --cni=calico \
        --ports=80:80,443:443 \
        --kubernetes-version v1.26.6 \
        --cpus 4 --memory 10000

Install the minikube addons:

    ᐅ for addon in ingress registry metrics-server; do mk addons enable "$addon"; done

Create the namespace `cattle-system` and deploy the secret `rancher-backup-credentials`:

    ᐅ kb create namespace cattle-system
    ᐅ kb apply -f sysadm-environment/k8s-private-data/rancher/rancher-backup-credentials.yaml

Install Rancher Backups:

    ᐅ helm repo list | awk '/rancher/'
    rancher-charts  https://charts.rancher.io
    rancher-stable  https://releases.rancher.com/server-charts/stable
    ᐅ helm repo update
    ᐅ CHART_VERSION=102.0.1+up3.1.1
    ᐅ helm install rancher-backup-crd rancher-charts/rancher-backup-crd \
        -n cattle-resources-system --create-namespace --version $CHART_VERSION
    ᐅ helm install rancher-backup rancher-charts/rancher-backup \
        -n cattle-resources-system --version $CHART_VERSION
    ᐅ kb get pods -n cattle-resources-system
    NAME                             READY   STATUS    RESTARTS   AGE
    rancher-backup-9cd86b8d7-2xwj9   1/1     Running   0          116s

Delete the backup elements that cause restoration errors:

Download a backup from https://minio-console.internal.admin.swh.network/, bucket `backup-rancher/rancher`.

    ᐅ mkdir rancher-restore-16052024
    ᐅ tar xvzf recurring-to-minio-df22cac6-f1a2-4bc9-9a89-068b733a848a-2024-05-15T22-00-00Z.tar.gz -C rancher-restore-16052024
    ᐅ cd rancher-restore-16052024
    ᐅ rm projectroletemplatebindings.management.cattle.io#v3/p-zsf5k/creator-project-owner.json
    ᐅ rm projectroletemplatebindings.management.cattle.io#v3/p-spm4t/creator-project-owner.json
    ᐅ rm projectroletemplatebindings.management.cattle.io#v3/p-t8mww/creator-project-owner.json
    ᐅ rm projectroletemplatebindings.management.cattle.io#v3/p-x7ls4/creator-project-owner.json
    ᐅ tar cvzf ../rancher-restore-16052024.tgz *

Upload the new tarball to https://minio-console.internal.admin.swh.network/, bucket `backup-rancher/`.

Create a restore resource:

`restore-local.yaml`:

    apiVersion: resources.cattle.io/v1
    kind: Restore
    metadata:
      name: restore-local
    spec:
      backupFilename: rancher-restore-16052024.tgz
      prune: false
      storageLocation:
        s3:
          credentialSecretName: rancher-backup-credentials
          credentialSecretNamespace: cattle-system
          bucketName: backup-rancher
          folder: ''
          endpoint: minio.admin.swh.network

Deploy the restore resource:

    ᐅ kb apply -f restore-local.yaml
    ᐅ stern -n cattle-resources-system rancher-backup
    [...]
    rancher-backup-9cd86b8d7-2xwj9 rancher-backup INFO[2024/05/16 15:11:41] Error getting object for controllerRef apps/v1/deployments/rancher: deployments.apps "rancher" not found
    rancher-backup-9cd86b8d7-2xwj9 rancher-backup INFO[2024/05/16 15:11:41] Done restoring

Ensure the restore status is `Completed`:

    ᐅ kb get restores
    NAME            BACKUP-SOURCE   BACKUP-FILE                    AGE     STATUS
    restore-local   S3              rancher-restore-16052024.tgz   3m58s   Completed

Delete the restore resource:

    ᐅ kb delete restores restore-local
    restore.resources.cattle.io "restore-local" deleted

Delete the resources that cause installation errors:

    ᐅ kb delete priorityclass rancher-critical
    priorityclass.scheduling.k8s.io "rancher-critical" deleted
    ᐅ kb delete configmap rancher-config -n cattle-system
    configmap "rancher-config" deleted

Install cert-manager:

    ᐅ helm install cert-manager jetstack/cert-manager \
        --namespace cert-manager \
        --create-namespace \
        --set installCRDs=true

Install Rancher v2.7.5:

    ᐅ helm install rancher rancher-stable/rancher \
        --namespace cattle-system \
        --set hostname=rancher.guisam.lan \
        --version 2.7.5
    ᐅ kb -n cattle-system rollout status deploy/rancher
    Waiting for deployment "rancher" rollout to finish: 0 of 3 updated replicas are available...
    Waiting for deployment spec update to be observed...
    Waiting for deployment "rancher" rollout to finish: 0 of 3 updated replicas are available...
    Waiting for deployment "rancher" rollout to finish: 1 of 3 updated replicas are available...
    Waiting for deployment "rancher" rollout to finish: 2 of 3 updated replicas are available...
    deployment "rancher" successfully rolled out

Update Rancher to the latest version:

    ᐅ helm upgrade rancher rancher-stable/rancher \
        --namespace cattle-system \
        --set hostname=rancher.guisam.lan
    ᐅ kb -n cattle-system rollout status deploy/rancher
    Waiting for deployment "rancher" rollout to finish: 2 out of 3 new replicas have been updated...
    Waiting for deployment "rancher" rollout to finish: 2 out of 3 new replicas have been updated...
    Waiting for deployment "rancher" rollout to finish: 2 out of 3 new replicas have been updated...
    Waiting for deployment "rancher" rollout to finish: 1 old replicas are pending termination...
    Waiting for deployment "rancher" rollout to finish: 1 old replicas are pending termination...
    Waiting for deployment "rancher" rollout to finish: 2 of 3 updated replicas are available...
    deployment "rancher" successfully rolled out

- Vincent Sellier marked this issue as related to #5036 (closed)

- Guillaume Samson mentioned in commit swh/infra/ci-cd/k8s-clusters-conf@b675c376

- Comment from a project Owner:

Rancher is now at version 2.8.4:

    ᐅ for pod in $(kb --context local get pods -n cattle-system -l app=rancher -o name)
    do
      kb --context local describe -n cattle-system "$pod" | \
        awk '/^([[:space:]]*Image|Name|Status):/'
    done
    Name:       rancher-58994f549-8s66c
    Status:     Running
        Image:  rancher/rancher:v2.8.4
    Name:       rancher-58994f549-z28lp
    Status:     Running
        Image:  rancher/rancher:v2.8.4

- Guillaume Samson closed
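The 2.8.4 image check from the last comment can also be scripted without parsing `kubectl describe` output. This is a minimal sketch, assuming bash and awk; `all_pods_on_image` is a hypothetical helper that reads `pod image` lines, here produced by a kubectl JSONPath query over the pod spec (the requested image, which is also what `describe` shows under `Image:`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads "<pod> <image>" lines on stdin and succeeds only
# if at least one pod is listed and every image equals the expected one.
all_pods_on_image() {
  local expected="$1"
  awk -v want="$expected" '
    NF == 0 { next }                        # ignore blank lines
    { n++ }
    $2 != want { print "unexpected image on " $1 ": " $2 > "/dev/stderr"; bad = 1 }
    END { exit (n == 0 || bad) }            # fail on no pods or any mismatch
  '
}

# Example (assumes kubectl access to the Rancher "local" cluster):
#   kubectl --context local -n cattle-system get pods -l app=rancher \
#     -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.spec.containers[0].image}{"\n"}{end}' \
#     | all_pods_on_image rancher/rancher:v2.8.4 && echo "all Rancher pods on v2.8.4"
```

Failing when no pod matches the label selector avoids reporting success against an empty or wrong namespace.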
